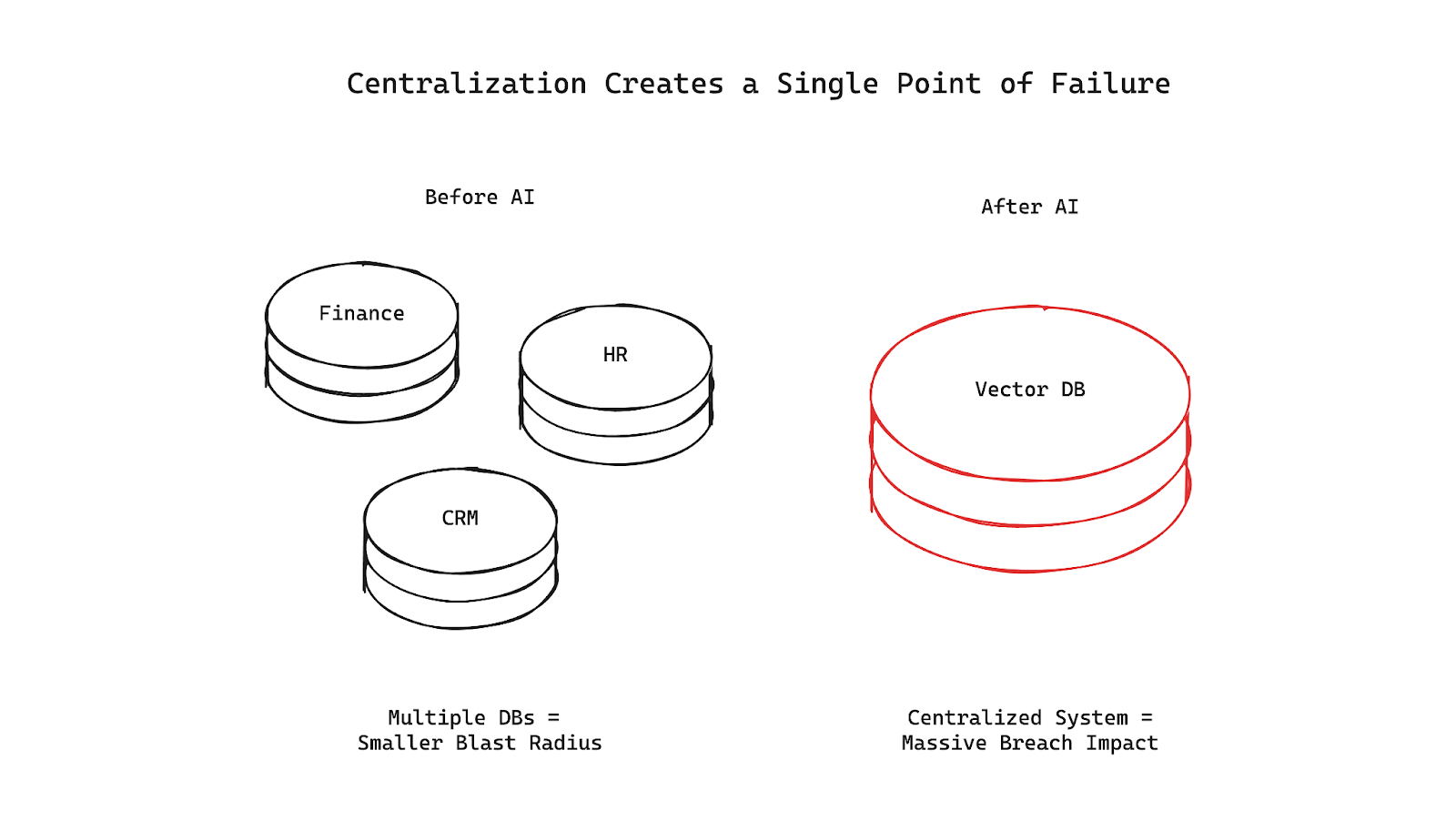

Before AI, enterprise data was fragmented. It lived across dozens - sometimes hundreds - of databases, file shares, SaaS tools, and silos. That fragmentation frustrated CIOs and CTOs who wanted faster insights, but from a security standpoint, it wasn’t all that bad.

An attacker looking to get a full picture of your organization’s knowledge had the challenge of breaching multiple systems, each with its own authentication, network controls, and audit trails. It was a grind, and that friction was on your side.

AI changes that.

Centralization: AI’s Most Overlooked Risk

Most enterprise AI applications - RAG or otherwise - work better with more data at their disposal. To make that possible, teams are centralizing data from disparate systems into a single vector database. That centralization is what lets an LLM give context-rich answers, reduces hallucinations, and unlocks value from all the documents, tickets, wiki pages, and PDFs scattered across the company.

But here’s the security problem nobody’s talking about:

For the first time, there’s a single system that contains a dense, high-value cross-section of your entire organization’s data. Breach that system, and you don’t just lose one database - you potentially lose everything.

Why This Is Worse Than a Normal Database Breach

1. The blast radius is bigger.

A traditional database breach exposes the data inside that database. A vector database breach exposes the data from every source system connected to it. Centralization multiplies the impact.
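To make the blast-radius point concrete, here is a minimal sketch of a single index fed by several source systems. The class, the source names, and the records are all hypothetical, and the vectors are tiny placeholders rather than real embeddings. The point is that once chunks from every system land in one store (often with the original text kept alongside as metadata), one read of that store returns data from all of them.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Record:
    source: str          # system the chunk was ingested from
    text: str            # original chunk text, often stored as metadata
    vector: list[float]  # embedding of the chunk (toy values here)


@dataclass
class CentralIndex:
    """Hypothetical single vector index fed by many source systems."""
    records: list[Record] = field(default_factory=list)

    def ingest(self, source: str, text: str, vector: list[float]) -> None:
        self.records.append(Record(source, text, vector))

    def dump(self) -> dict[str, list[str]]:
        """What an attacker with read access to the index walks away with."""
        exposed: dict[str, list[str]] = defaultdict(list)
        for r in self.records:
            exposed[r.source].append(r.text)
        return dict(exposed)


index = CentralIndex()
index.ingest("jira", "Customer X escalation: payment outage", [0.1, 0.9])
index.ingest("confluence", "Q3 acquisition plan draft", [0.7, 0.2])
index.ingest("salesforce", "Renewal terms for customer X", [0.3, 0.4])

leaked = index.dump()
print(sorted(leaked))  # ['confluence', 'jira', 'salesforce']
```

Compromising any one of the original systems would have exposed one entry in that list; compromising the central index exposes every one.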

2. Embeddings leak the original data.

Vector embeddings are dense mathematical representations of text or images. They’re not “anonymous” or “irreversible.” In fact, inversion attacks can reconstruct original content from embeddings with surprising accuracy. Cyborg recently demonstrated this live at the Confidential Computing Summit in June 2025 - watch the attack here.
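Full inversion attacks typically train a model to decode text from embeddings. A simpler illustration of the same leak is a matching attack: if the attacker can compute embeddings with the same model (often a public one), a stolen vector can be compared against guessed texts until one lines up. The sketch below assumes a hypothetical `toy_embed` function (a hashed bag of words, normalized to unit length) as a stand-in for a real embedding model; it is not the attack Cyborg demonstrated, only a minimal example of why a raw vector is not anonymous.

```python
import hashlib
import math

DIM = 64  # toy embedding dimension (assumption)


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        h = int.from_bytes(hashlib.sha256(word.encode()).digest()[:4], "big")
        vec[h % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Inputs are already unit length, so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


# The attacker has exfiltrated a raw vector but not the text it came from.
stolen = toy_embed("patient diagnosed with type 2 diabetes")

# The attacker embeds guesses with the same model and keeps the closest one.
candidates = [
    "quarterly revenue grew eight percent",
    "patient diagnosed with type 2 diabetes",
    "reset your vpn password every 90 days",
]
best = max(candidates, key=lambda c: cosine(stolen, toy_embed(c)))
print(best)
```

With a real embedding model the match is fuzzier but more dangerous: paraphrases land near each other, so the attacker does not need the exact wording to recover what a vector is about.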

3. Standard vector databases are almost entirely unprotected.

Most offer no encryption at all. A few encrypt at rest, but almost none encrypt in use. That means embeddings and metadata sit in plaintext in memory, in caches, in query logs - exactly where attackers look first.

This Isn’t Theoretical

If you’ve followed the security industry for any length of time, you’ve seen the headlines:

Those were bad. But in every case, the attacker got only one dataset.

A compromised vector database in an AI application could expose dozens of datasets at once - and in a format that’s easy to misuse or reconstruct. The surface area is smaller, but the stakes are far higher.

What a Real Solution Looks Like

To eliminate this risk, a vector database needs to:

- Encrypt everything end-to-end - at rest, in transit, and in use - so no plaintext embeddings ever exist in the system.

- Isolate encryption keys with BYOK (bring your own key) or HYOK (hold your own key), so you - not your vendor - control access.

- Maintain performance at scale so security doesn’t come at the cost of latency.

- Integrate with existing stacks (Postgres, Redis, cloud KMS, AI frameworks) without forcing architectural rewrites.

- Support compliance requirements like HIPAA, GDPR, and FedRAMP without bolt-ons or workarounds.

Anything less is inadequate for serious or long-term use.
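A common building block behind BYOK and HYOK designs is a key hierarchy: one root key that you control, with narrower keys derived from it per index or tenant, so only whoever holds the root can recompute the keys below it. The sketch below derives hypothetical per-index keys with HKDF (RFC 5869) using only the Python standard library. It illustrates the key-hierarchy idea under that assumption; it is not a description of how Cyborg manages keys, and the index names are made up.

```python
import hashlib
import hmac
import secrets


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a PRK, then expand it to `length` bytes."""
    if length > 255 * 32:
        raise ValueError("length too large for HKDF-SHA256")
    # Extract step: an empty salt is replaced by HashLen zero bytes, per the RFC.
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    # Expand step: T(i) = HMAC(PRK, T(i-1) || info || i), concatenated and truncated.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


# Root key generated and held by the customer (BYOK/HYOK). In practice it would
# live in the customer's KMS or HSM rather than in application memory.
customer_root_key = secrets.token_bytes(32)
salt = secrets.token_bytes(16)

# Hypothetical per-index keys derived from the customer's root key.
support_key = hkdf_sha256(customer_root_key, salt, b"index:support-tickets")
wiki_key = hkdf_sha256(customer_root_key, salt, b"index:wiki")
```

Because each index gets its own key, a leaked index key exposes one index rather than all of them, and withholding or rotating the root key cuts off the ability to derive any of them again.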

Where Cyborg Fits

Cyborg has built the first and only vector database proxy that sits between your applications and your vector database, providing encryption-in-use for all vectorized data, secured with your keys and GPU-accelerated for speed. Designed for broad compatibility with your existing datastores and AI frameworks, it’s a secure, high-speed foundation for centralized AI data - built into the architecture, not bolted on.

If you’re building or running enterprise AI applications, now is the time to think about this risk. The architecture shifts are already happening inside your organization. You can’t stop centralization - but you can make sure it doesn’t become your biggest breach vector.

Learn more about how Cyborg secures enterprise AI data →

For more technical details, check out our security docs.